MOSNet: Deep Learning based Objective Assessment for Voice Conversion

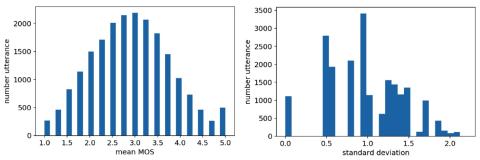

Existing objective evaluation metrics for voice conversion (VC) do not always correlate with human perception. Therefore, training VC models with such criteria may not effectively improve the naturalness and similarity of converted speech. In this paper, we propose deep learning-based assessment models to predict human ratings of converted speech. We adopt convolutional and recurrent neural network models to build a mean opinion score (MOS) predictor, termed MOSNet. The proposed models are tested on the large-scale listening test results of the Voice Conversion Challenge (VCC) 2018. Experimen...