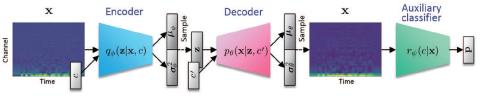

This paper proposes a non-parallel many-to-many voice conversion (VC) method using a variant of the conditional variational autoencoder (CVAE) called an auxiliary classifier VAE (ACVAE). The proposed method has three key features. First, it adopts fully convolutional architectures to construct the encoder and decoder networks so that the networks can learn conversion rules that capture time dependencies in the acoustic feature sequences of source and target speech. Second, it uses an information-theoretic regularization for the model training to ensure that the information in the attribute class label will not be lost in the conversion process. With regular CVAEs, the encoder and decoder are free to ignore the attribute class label input. This is problematic because the attribute class label then has little effect on controlling the voice characteristics of input speech at test time. Such situations can be avoided by introducing an auxiliary classifier and training the encoder and decoder so that the attribute classes of the decoder outputs are correctly predicted by the classifier. Third, it avoids producing buzzy-sounding speech at test time by simply transplanting the spectral details of the input speech into its converted version. Subjective evaluation experiments revealed that this simple method worked reasonably well in a non-parallel many-to-many speaker identity conversion task.

Conclusions

This paper proposed a non-parallel many-to-many VC method using a conditional VAE (CVAE) variant called an auxiliary classifier VAE (ACVAE). The proposed method has three key features. First, we adopted fully convolutional architectures to construct the encoder and decoder networks so that the networks could learn conversion rules that capture time dependencies in the acoustic feature sequences of source and target speech. Second, we proposed using an information-theoretic regularization for the model training to ensure that the information in the latent attribute label would not be lost in the generation process. With regular CVAEs, the encoder and decoder are free to ignore the attribute class label input. This is problematic because the attribute class label input then has little effect on controlling the voice characteristics of the input speech. To avoid such situations, we proposed introducing an auxiliary classifier and training the encoder and decoder so that the attribute classes of the decoder outputs are correctly predicted by the classifier. Third, to avoid producing buzzy-sounding speech at test time, we proposed simply transplanting the spectral details of the input speech into its converted version. Subjective evaluation experiments on a non-parallel many-to-many speaker identity conversion task revealed that the proposed method obtained higher sound quality and speaker similarity than the VAEGAN-based method.
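To make the second feature concrete, the following is a minimal sketch of how an auxiliary classifier term could be added to a CVAE training loss. It is not the implementation used in this paper. It assumes a diagonal Gaussian posterior with a standard normal prior, and it approximates the information-theoretic regularizer by the cross-entropy between the classifier's predictions on the decoder outputs and the class labels fed to the decoder. All names (`classifier_penalty`, `gaussian_kl`, `acvae_style_loss`, the weight `lam`) are hypothetical, and the encoder, decoder, and classifier networks are left out.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def classifier_penalty(logits, target_classes):
    """Mean cross-entropy between the classifier's predictions on the decoder
    outputs (logits, shape [batch, n_classes]) and the class labels that were
    fed to the decoder (target_classes, shape [batch])."""
    logp = log_softmax(logits)
    return -logp[np.arange(len(target_classes)), target_classes].mean()


def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), averaged over the batch.
    Assumption: diagonal Gaussian posterior and standard normal prior."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1).mean()


def acvae_style_loss(recon_error, mu, log_var, logits, target_classes, lam=1.0):
    """Hypothetical combined loss: the usual CVAE terms plus a penalty that is
    small only when the decoder output carries the requested class.
    lam is an assumed trade-off weight, not a value from the paper."""
    return (recon_error
            + gaussian_kl(mu, log_var)
            + lam * classifier_penalty(logits, target_classes))
```

Under this sketch, a decoder that ignores its class label input produces outputs the classifier cannot attribute to the requested class, so the penalty stays high and the gradient pushes the encoder and decoder toward using the label.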