Inspired by recent work on neural network image generation which rely on backpropagation towards the network inputs, we present a proof-of-concept system for speech texture synthesis and voice conversion based on two mechanisms: approximate inversion of the representation learned by a speech recognition neural network, and on matching statistics of neuron activations between different source and target utterances. Similar to image texture synthesis and neural style transfer, the system works by optimizing a cost function with respect to the input waveform samples. To this end we use a differentiable mel-filterbank feature extraction pipeline and train a convolutional CTC speech recognition network. Our system is able to extract speaker characteristics from very limited amounts of target speaker data, as little as a few seconds, and can be used to generate realistic speech babble or reconstruct an utterance in a different voice.

Limitations and Future Work

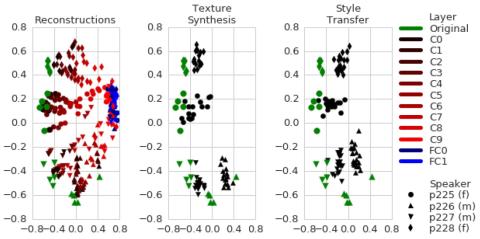

We demonstrate a proof-of-concept speech texture synthesis and voice conversion system that derives a statistical description of the target voice from the activations of a deep convolutional neural network trained to perform speech recognition. The main benefit of the proposed approach is the ability to utilize very limited amounts of data from the target speaker. Leveraging the distribution of natural speech captured by the pretrained network, a few seconds of speech are sufficient to synthesize recognizable characteristics of the target voice. However, the proposed approach is also quite slow, requiring several thousand gradient descent steps. In addition, the synthesized utterances are of relatively low quality.

The proposed approach can be extended in may ways. First, analogously to the fast image style transfer algorithms, the Gram tensor loss can be used as additional supervision for a speech synthesis neural network such as WaveNet or Tacotron. For example, it might be feasible to use the style loss to extend a neural speech synthesis system to a wide set of speakers given only a few seconds of recorded speech from each one. Second, the method depends on a pretrained speech recognition network. In this work we used a fairly basic network using feature extraction parameters tuned for speech recognition. Synthesis quality could probably be improved by using higher sampling rates, increasing the window overlap and running the network on linear-, rather than mel-filterbank features.