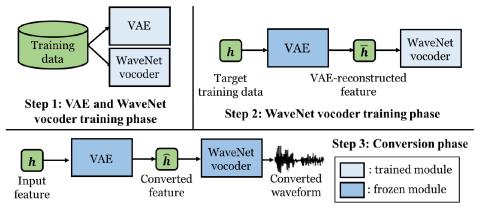

This paper presents a framework for refining WaveNet vocoders in variational autoencoder (VAE) based voice conversion (VC), which reduces the quality degradation caused by the mismatch between training and testing data. Conventional WaveNet vocoders are trained on natural acoustic features but, in VC, are conditioned on converted features at conversion time, and this mismatch often causes significant degradation in both quality and speaker similarity. In this work, we exploit the particular structure of VAEs to refine WaveNet vocoders with the self-reconstructed features generated by the VAE. These features have characteristics similar to those of the converted features while sharing the temporal structure of the target natural features. Our analysis of these features confirms that the self-reconstructed features are similar to the converted features. Objective and subjective experimental results demonstrate the effectiveness of the proposed framework.
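The data flow behind the refinement can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the trained VAE encoder and decoder are passed in as callables, the names `build_finetune_pairs` and `hop_size` and the toy models are hypothetical, and the actual WaveNet fine-tuning loop is omitted. The sketch shows why self-reconstructed features can serve as fine-tuning inputs: they pass through the same VAE bottleneck as converted features, yet they keep the frame timing of the target utterance, so each one still pairs with the natural target waveform.

```python
from typing import Callable, List, Sequence, Tuple

Frame = List[float]        # one acoustic-feature frame (e.g. a spectral envelope)
Features = List[Frame]     # frame sequence for one utterance
Waveform = List[float]     # raw audio samples

# Hypothetical signatures for a trained VAE-VC model.
Encoder = Callable[[Features], Features]        # features -> per-frame latents
Decoder = Callable[[Features, str], Features]   # latents + speaker code -> features


def build_finetune_pairs(
    target_utts: Sequence[Tuple[Features, Waveform]],
    encode: Encoder,
    decode: Decoder,
    target_speaker: str,
    hop_size: int,
) -> List[Tuple[Features, Waveform]]:
    """Pair VAE self-reconstructed target features with the natural target waveform.

    Self-reconstruction encodes the target's own features and decodes them with
    the target speaker code, so the output keeps one frame per input frame and
    stays aligned with the natural waveform.
    """
    pairs = []
    for feats, wav in target_utts:
        recon = decode(encode(feats), target_speaker)
        if len(recon) != len(feats):
            raise ValueError("the VAE must preserve the number of frames")
        if len(recon) * hop_size != len(wav):
            raise ValueError("features and waveform are not frame-aligned")
        pairs.append((recon, wav))
    return pairs


# Toy stand-ins (hypothetical): rounding acts as a lossy bottleneck, and the
# decoder adds a per-speaker offset in place of a learned speaker code.
def toy_encode(feats: Features) -> Features:
    return [[round(v, 1) for v in frame] for frame in feats]


def toy_decode(latents: Features, speaker: str) -> Features:
    offset = {"tgt": 0.05}.get(speaker, 0.0)
    return [[v + offset for v in frame] for frame in latents]


feats = [[0.123, 0.456], [0.789, 0.012]]
wav = [0.0] * 160  # 2 frames x hop size of 80 samples
pairs = build_finetune_pairs([(feats, wav)], toy_encode, toy_decode, "tgt", hop_size=80)
```

Applying the same `decode` call to latents encoded from a source utterance would instead yield converted features. Those follow the source utterance's timing and have no matching natural target waveform, so they cannot be paired directly with target speech for fine-tuning. This is the gap that the self-reconstructed features fill.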

Conclusion

In this work, we have proposed a framework for refining the WaveNet vocoder in VAE-VC. We use the self-reconstruction procedure of the VAE-VC framework to fine-tune the WaveNet vocoder, alleviating the performance degradation caused by the mismatch between its training and conversion phases. Evaluation results demonstrate the effectiveness of the proposed method in terms of both naturalness and speaker similarity. In future work, we plan to investigate the use of VAE-reconstructed features in the multi-speaker training stage of the WaveNet vocoder to further improve its robustness. Speech samples are available at https://unilight.github.io/VAE-WNV-VC-Demo/