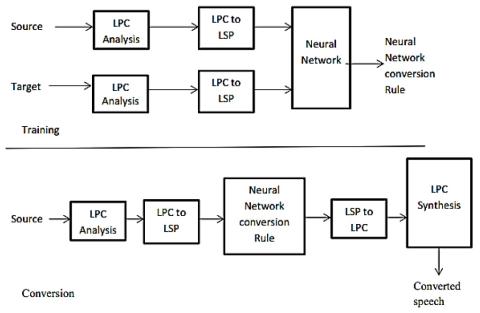

This research presents a voice conversion model based on coefficient mapping and a neural network. Most previous work on parametric speech synthesis did not account for losses in spectral detail, which cause over-smoothing and, invariably, an appreciable deviation of the converted speech from the target speaker. This work developed an improved model that uses both linear predictive coding (LPC) and line spectral frequency (LSF) coefficients to parametrize the source speech signal, in order to reveal the effect of over-smoothing. The non-linear mapping ability of a neural network was used to map the source speech vectors into the acoustic vector space of the target. Training the neural network on LPC coefficients yielded poor results because of the instability of the LPC filter poles. The LPC coefficients were therefore converted to LSF coefficients before being used to train a 3-layer neural network. The algorithm was tested on noisy data, and the results were evaluated using mel-cepstral distance. The evaluation shows a 35.7 percent reduction in the spectral distance between the target and the converted speech.
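The LPC-to-LSF conversion step can be illustrated with a minimal sketch of the textbook construction, in which the LSFs are the unit-circle root angles of the sum and difference polynomials built from the LPC polynomial. This is not necessarily the implementation used in this work; the function name `lpc_to_lsf`, the tolerance `tol`, and the coefficient convention A(z) = 1 + a1 z^-1 + ... + ap z^-p are assumptions made for illustration.

```python
import numpy as np

def lpc_to_lsf(a, tol=1e-6):
    """Convert LPC coefficients [1, a1, ..., ap] to LSFs in radians.

    Hypothetical helper: assumes A(z) = 1 + a1 z^-1 + ... + ap z^-p
    is minimum phase (all roots inside the unit circle).
    """
    a = np.asarray(a, dtype=float)
    a = a / a[0]                        # normalise so the leading term is 1
    a_ext = np.concatenate([a, [0.0]])  # pad to order p + 1

    # Sum and difference polynomials:
    # P(z) = A(z) + z^-(p+1) A(1/z),  Q(z) = A(z) - z^-(p+1) A(1/z)
    p_poly = a_ext + a_ext[::-1]
    q_poly = a_ext - a_ext[::-1]

    # For a minimum-phase A(z), the roots of P and Q lie on the unit circle.
    roots = np.concatenate([np.roots(p_poly), np.roots(q_poly)])
    angles = np.angle(roots)

    # Keep one angle per conjugate pair and drop the trivial roots at z = +/-1.
    lsf = angles[(angles > tol) & (angles < np.pi - tol)]
    return np.sort(lsf)

# Example with an arbitrary stable second-order filter (illustrative values only).
lsf = lpc_to_lsf([1.0, -1.2, 0.5])
print(lsf)
```

For a stable filter the resulting LSFs are ordered and lie between 0 and pi, which is one reason they are commonly regarded as better behaved than raw LPC coefficients as regression targets.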

Conclusion

This work uses LPC and LSF filter coefficients to underscore the impact of speech parameterization in voice conversion. Losses in spectral detail lead to over-smoothing, which degrades the quality of the synthesized speech in terms of the distance between the target and the converted speech. A neural network and LSF coefficients were used to carry out the conversion, and the results show an improvement within the range of existing models.
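The distance between target and converted speech can be sketched with the commonly used definition of mel-cepstral distance. The exact variant used in this work is not stated, so the choices below are assumptions: the frames are already time-aligned, the 0th (energy) coefficient is excluded, and the function name `mel_cepstral_distance` is hypothetical.

```python
import numpy as np

def mel_cepstral_distance(target_mcep, converted_mcep):
    """Mean mel-cepstral distance in dB between two aligned sequences.

    Both inputs have shape (frames, coefficients). Assumes the frames are
    already time-aligned and that column 0 holds the energy term, which
    is excluded from the distance.
    """
    target = np.asarray(target_mcep, dtype=float)
    converted = np.asarray(converted_mcep, dtype=float)
    if target.shape != converted.shape:
        raise ValueError("sequences must be aligned and have the same shape")

    diff = target[:, 1:] - converted[:, 1:]
    # Per-frame distance: (10 / ln 10) * sqrt(2 * sum_d (c_d - c'_d)^2)
    per_frame = (10.0 / np.log(10.0)) * np.sqrt(2.0 * np.sum(diff ** 2, axis=1))
    return float(np.mean(per_frame))

# Illustrative values only, not data from this work.
target = np.array([[0.0, 1.0, 2.0], [0.0, 0.5, 0.5]])
converted = np.array([[0.0, 1.0, 1.0], [0.0, 0.5, 0.5]])
mcd = mel_cepstral_distance(target, converted)
print(mcd)
```

A relative reduction such as the one reported here would then be computed by comparing this distance for the converted speech against the same distance for a baseline.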