Speech data conveys sensitive speaker attributes like identity or accent. With a small amount of found data, such attributes can be inferred and exploited for malicious purposes: voice cloning, spoofing, etc. Anonymization aims to make the data unlinkable, i.e., ensure that no utterance can be linked to its original speaker. In this paper, we investigate anonymization methods based on voice conversion. In contrast to prior work, we argue that various linkage attacks can be designed depending on the attacker's knowledge about the anonymization scheme. We compare two frequency warping-based conversion methods and a deep learning-based method in three attack scenarios. The utility of converted speech is measured via the word error rate achieved by automatic speech recognition, while privacy protection is assessed by the increase in equal error rate achieved by state-of-the-art i-vector or x-vector-based speaker verification. Our results show that voice conversion schemes are unable to effectively protect against an attacker who has extensive knowledge of the type of conversion and how it has been applied, but may provide some protection against less knowledgeable attackers.

Conclusion and Future Work

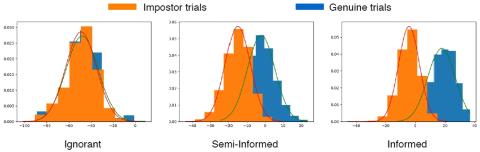

We investigated the use of VC methods to protect the privacy of speakers by concealing their identity. We formally defined target speaker selection strategies and linkage attack scenarios based on the attacker's knowledge. Our experimental results indicate that both aspects play an important role in the strength of the protection. Simple methods such as VTLN-based VC with an appropriate target selection strategy can provide reasonable protection against linkage attacks by attackers with partial knowledge.

Our characterization of strategies and attack scenarios opens up several avenues for future research. To increase the naturalness of converted speech, we can explore intra-gender VC as well as the use of a supervised phonetic classifier in VTLN. We also plan to conduct experiments with a broader range of attackers and use standard local and global unlinkability metrics to precisely evaluate the privacy protection in various scenarios. More generally, designing a privacy-preserving transformation which induces a large overlap between genuine and impostor distributions even in the Informed attack scenario remains an open question. In the case of disentangled representations, this calls for avoiding any leakage of private attributes into the content embeddings.
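To make the link between distribution overlap and the equal error rate concrete, the following is a minimal sketch of how an EER can be estimated from genuine and impostor verification scores. It is an illustration only, not the evaluation code used in our experiments: the function name `equal_error_rate`, the score values, and the convention that a trial is accepted when its score is at least the threshold are all assumptions made for this example.

```python
def equal_error_rate(genuine, impostor):
    """Estimate the EER from two lists of verification scores.

    Assumption: higher scores mean "same speaker", and a trial is
    accepted when its score is >= the decision threshold.
    """
    # Candidate thresholds: every distinct observed score.
    thresholds = sorted(set(genuine) | set(impostor))
    best_gap, eer = None, None
    for t in thresholds:
        # False acceptance rate: impostor trials wrongly accepted.
        far = sum(s >= t for s in impostor) / len(impostor)
        # False rejection rate: genuine trials wrongly rejected.
        frr = sum(s < t for s in genuine) / len(genuine)
        gap = abs(far - frr)
        # Keep the threshold where the two error rates are closest.
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Hypothetical scores, for illustration only.
separated = equal_error_rate([2.0, 3.0, 4.0], [-1.0, 0.0, 1.0])
overlapping = equal_error_rate([0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 2.0, 3.0])
print(separated, overlapping)
```

In this toy setting, well-separated score distributions yield a low EER (the attacker links utterances reliably), whereas identical distributions push the EER toward 50%, which is the behavior an ideal anonymization scheme would induce even for an Informed attacker.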