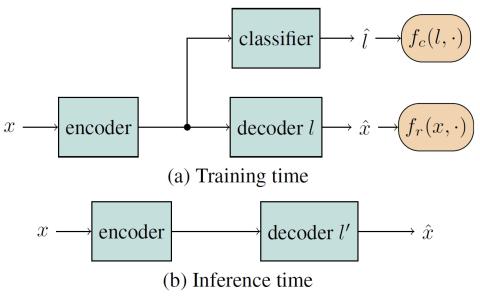

We present a method for converting voices between a set of speakers. Our method is based on training multiple autoencoder paths that share a single speaker-independent encoder and use multiple speaker-dependent decoders. The autoencoders are trained with an additional adversarial loss, provided by an auxiliary classifier, that guides the output of the encoder to be speaker independent. The training of the model is unsupervised in the sense that it requires neither the same utterances from all speakers nor time alignment over phonemes. Because a single encoder is used, our method can generalize to converting the voice of out-of-training speakers to speakers in the training dataset. We present subjective tests corroborating the performance of our method.

Discussion

We presented a method for voice conversion using neural networks trained on non-parallel data. The method is based on training multiple autoencoder paths that share a single speaker-independent encoder and use multiple speaker-dependent decoders. The autoencoder paths are trained to minimize the reconstruction error together with an adversarial cost that tries to make the output of the encoder carry no information about the speaker identity. The training is unsupervised in the sense that we do not require a parallel speech dataset from the speakers. We evaluated our method on a subset of speakers from the VCTK dataset. Qualitatively, we observe that the converted spectrograms carry characteristics of the spectrograms of the target speaker. The results of subjective tests corroborate our algorithm’s voice conversion performance. Although our algorithm can convert the voice of the source speaker in the direction of the target, we observe that the reconstructed audio has some artifacts. Reducing these artifacts is ongoing work.
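To make the structure of the training objective concrete, the following is a minimal NumPy sketch of one shared encoder, one decoder per speaker, and an auxiliary speaker classifier on the encoder output. It is an illustration under assumptions, not the architecture or loss used in this work: the linear layers, the layer sizes, the mean-squared reconstruction error, the negative cross-entropy form of the adversarial term, and the names `encode`, `decode`, `losses`, `convert`, and `adv_weight` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
N_SPEAKERS, FEAT_DIM, CODE_DIM = 3, 8, 4

# One shared encoder, one decoder per speaker, one auxiliary classifier.
W_enc = rng.normal(scale=0.1, size=(FEAT_DIM, CODE_DIM))
W_dec = rng.normal(scale=0.1, size=(N_SPEAKERS, CODE_DIM, FEAT_DIM))
W_clf = rng.normal(scale=0.1, size=(CODE_DIM, N_SPEAKERS))


def encode(x):
    """Speaker-independent encoder (assumed: one tanh layer)."""
    return np.tanh(x @ W_enc)


def decode(z, speaker):
    """Speaker-dependent decoder selected by speaker index."""
    return z @ W_dec[speaker]


def speaker_log_probs(z):
    """Log-softmax of the auxiliary classifier over speaker identities."""
    logits = z @ W_clf
    logits = logits - logits.max(axis=-1, keepdims=True)
    return logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))


def losses(x, speaker, adv_weight=1.0):
    """Return (autoencoder loss, classifier loss, reconstruction error).

    The classifier minimizes its cross-entropy; the autoencoder path
    minimizes reconstruction error minus the weighted classifier
    cross-entropy (an assumed form of the adversarial cost).
    """
    z = encode(x)
    recon = np.mean((decode(z, speaker) - x) ** 2)
    clf_ce = -np.mean(speaker_log_probs(z)[:, speaker])
    return recon - adv_weight * clf_ce, clf_ce, recon


def convert(x, target_speaker):
    """Conversion: encode source frames, decode with the target's decoder."""
    return decode(encode(x), target_speaker)


frames = rng.normal(size=(5, FEAT_DIM))  # stand-in for spectrogram frames
ae_loss, clf_loss, recon = losses(frames, speaker=0)
converted = convert(frames, target_speaker=2)
```

Because `convert` only needs the shared encoder and a trained decoder, the same call applies to frames from a speaker that was never seen in training, which is the generalization property described above.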