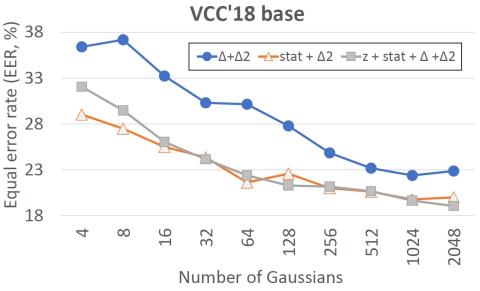

Voice conversion (VC) aims to convert speaker characteristics without altering content. Due to training data limitations and modeling imperfections, it is difficult to achieve believable speaker mimicry without introducing processing artifacts; performance assessment of VC therefore usually involves both speaker similarity and quality evaluation by a human panel. Because such evaluation is time-consuming, expensive, and non-reproducible, it hinders rapid prototyping of new VC technology. We address artifact assessment using an alternative, objective approach that leverages prior work on spoofing countermeasures (CMs) for automatic speaker verification. There, CMs are used to reject 'fake' inputs such as replayed, synthetic, or converted speech, but their potential for automatic speech artifact assessment remains unknown. This study serves to fill that gap. As a supplement to subjective results for the 2018 Voice Conversion Challenge (VCC'18) data, we configure a standard constant-Q cepstral coefficient (CQCC) CM to quantify the extent of processing artifacts. The equal error rate (EER) of the CM, a confusability index of VC samples with real human speech, serves as our artifact measure. Two clusters of VCC'18 entries are identified: low-quality ones with detectable artifacts (low EERs), and higher-quality ones with fewer artifacts. None of the VCC'18 systems, however, is perfect: all EERs are below 30 % (the 'ideal' value would be 50 %). Our preliminary findings suggest the potential of CMs outside their original application, as a supplemental optimization and benchmarking tool to enhance VC technology.

Conclusion

We have proposed the use of a spoofing countermeasure for objective artifact assessment of converted voices as a supplement to the VCC’18 challenge results. Our approach is reference-free and text-independent in the sense that it requires access to neither the original source speaker waveform nor any text transcripts. It assigns a single EER number to a batch of converted speech utterances from a single VC system, and can therefore be used to compare different VC systems in terms of their artifacts.
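To make the per-system EER concrete, the following is a minimal sketch of how a single EER value could be obtained from countermeasure scores. It assumes that higher scores mean "more human-like", that one list holds scores for real human utterances and the other holds scores for one VC system's converted utterances, and it uses a simple threshold sweep; the function name `equal_error_rate` is illustrative and not taken from the study.

```python
def equal_error_rate(bonafide_scores, spoof_scores):
    """Approximate EER in [0, 1] by sweeping a threshold over all observed scores.

    Assumption: a higher score means the utterance looks more like human speech.
    """
    # Candidate thresholds: every observed score, plus +inf (reject everything).
    thresholds = sorted(set(bonafide_scores) | set(spoof_scores))
    thresholds.append(float("inf"))

    best_gap, eer = float("inf"), None
    for t in thresholds:
        # Miss rate: human utterances scored below the threshold (rejected).
        miss = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        # False-alarm rate: converted utterances scored at or above it (accepted).
        false_alarm = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(miss - false_alarm)
        if gap < best_gap:
            # Keep the operating point where the two error rates are closest.
            best_gap, eer = gap, (miss + false_alarm) / 2
    return eer


# Hypothetical scores: well-separated systems give a low EER,
# indistinguishable ones approach 0.5.
easy_to_detect = equal_error_rate([2.0, 3.0, 4.0], [-1.0, 0.0, 1.0])
indistinguishable = equal_error_rate([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 4.0])
```

Under this reading, a VC system whose converted utterances yield an EER near 0 has easily detectable artifacts, while an EER near 0.5 would mean the countermeasure cannot tell its output from human speech.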

Although the tested countermeasure, which uses a CQCC frontend and a GMM backend, was found to be sensitive to the choice of model parameters and acoustic features, our results indicate the clear potential of spoofing countermeasure scores as a convenient and complementary tool for automatically assessing the amount of audible and non-audible speech artifacts.
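As an illustration of how a GMM backend can turn frame-level features into an utterance score, here is a minimal sketch. It assumes the common two-model arrangement (one GMM for human speech, one for spoofed speech, scored by an average log-likelihood ratio) with diagonal covariances; this section does not spell out the backend configuration, so the arrangement, the function names, and the toy parameters below are all assumptions. Feature extraction (e.g. CQCCs) and GMM training are not shown.

```python
import math


def diag_gmm_loglik(frame, weights, means, variances):
    """Log-likelihood of one feature frame under a diagonal-covariance GMM."""
    component_logs = []
    for w, mu, var in zip(weights, means, variances):
        ll = math.log(w)
        for x, m, v in zip(frame, mu, var):
            # Log of a univariate Gaussian density, summed over dimensions.
            ll += -0.5 * (math.log(2.0 * math.pi * v) + (x - m) ** 2 / v)
        component_logs.append(ll)
    # Log-sum-exp over mixture components for numerical stability.
    top = max(component_logs)
    return top + math.log(sum(math.exp(c - top) for c in component_logs))


def llr_score(frames, human_gmm, spoof_gmm):
    """Average per-frame log-likelihood ratio; positive means more human-like."""
    total = 0.0
    for frame in frames:
        total += diag_gmm_loglik(frame, *human_gmm) - diag_gmm_loglik(frame, *spoof_gmm)
    return total / len(frames)


# Toy one-dimensional, single-component models (hypothetical parameters):
# each GMM is a tuple of (weights, means, variances).
human_gmm = ([1.0], [[0.0]], [[1.0]])
spoof_gmm = ([1.0], [[3.0]], [[1.0]])
score_humanlike = llr_score([[0.0]], human_gmm, spoof_gmm)
score_spooflike = llr_score([[3.0]], human_gmm, spoof_gmm)
```

Scores of this kind, collected over human utterances and over one VC system's output, are the inputs from which a per-system EER is computed.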

The obtained results are in reasonable agreement with the types of waveform generation methods used in the VC systems. Our results indicate that the waveform filtering, SuperVP, and Griffin-Lim methods produce relatively fewer artifacts. Since these methods are not included in the current ASVspoof datasets, we argue that it is also important to create new spoofing materials based on them and to train more robust anti-spoofing countermeasures in practice.

We also found that perceptually convincing WaveNet-based VC samples in the VCC’18 have detectable artifacts. This implies that the current best VC samples may fool human ears but not necessarily CM systems. No system yet perfectly fools both human listeners and CM systems.

While our study serves as a proof of concept, we foresee several possible future directions. First, it would be interesting to compare the performance to standard objective artifact measures used in assessing speech codecs and speech enhancement methods, and to other spoofing countermeasure frontends besides CQCCs. Some alternative features may provide a stronger correlation with the MOS scores. Second, given the obvious issue of selecting training data and enriching it with the latest VC techniques, it might be relevant to consider one-class approaches that require human training speech only. Even though such approaches have had only moderate success in anti-spoofing, it would be interesting to revisit them in the context of artifact assessment.