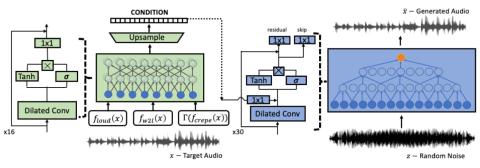

We present a wav-to-wav generative model for singing voice conversion from any identity. Our method drives a waveform-based generator with two inputs: features from an acoustic model trained for automatic speech recognition, and melody features extracted from the input. The proposed generative architecture is invariant to the speaker's identity and can be trained to generate target singers from unlabeled training data, using either speech or singing as the source. The model is optimized end-to-end without any manual supervision, such as lyrics, musical notes, or parallel samples. The proposed approach is fully convolutional and can generate audio in real time. Experiments show that our method significantly outperforms the baseline methods and generates audio samples that are convincingly better than those of alternative approaches.
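The abstract does not say exactly how the speaker-agnostic ASR features and the melody features are combined before they reach the generator. One plausible reading, sketched here under stated assumptions, is a per-frame concatenation. The function name `build_conditioning`, the shared frame rate, the log-F0 encoding, and the binary voicing flag are all hypothetical choices for illustration, not details taken from the method.

```python
import math
from typing import List, Sequence


def build_conditioning(
    asr_frames: Sequence[Sequence[float]],
    f0_frames: Sequence[float],
) -> List[List[float]]:
    """Concatenate ASR features with melody features, frame by frame.

    Hypothetical sketch: assumes both streams share one frame rate. If their
    lengths differ (e.g. from padding), both are truncated to the shorter one.
    """
    n = min(len(asr_frames), len(f0_frames))
    cond: List[List[float]] = []
    for t in range(n):
        f0 = f0_frames[t]
        # Hypothetical convention: an F0 of 0 marks an unvoiced frame.
        voiced = 1.0 if f0 > 0 else 0.0
        # Log scale compresses the pitch range; unvoiced frames get 0.0.
        log_f0 = math.log(f0) if f0 > 0 else 0.0
        cond.append(list(asr_frames[t]) + [log_f0, voiced])
    return cond
```

Because the ASR features carry content but not identity, a generator conditioned on this vector has to learn the target singer's timbre on its own, which is consistent with the identity-invariance claim above.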

Conclusion

We present an unsupervised method that converts a singing voice into the voice of a target singer, where the target may be sampled from either speech or singing. The method employs multiple pre-trained encoders and perceptual losses, and achieves state-of-the-art results on both objective and subjective measures. Conditioning the generator on a sine excitation was shown to further improve the results. As future work, we would like to focus on temporal modification of the input singing to better match the style of the target singer.
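A sine excitation is commonly derived from an F0 contour by accumulating phase sample by sample, as in neural source-filter vocoders. The following is a minimal sketch, assuming a frame-level F0 contour in which 0 marks unvoiced frames, a fixed hop size, silence (zeros) in unvoiced regions, and an amplitude of 0.1. The function name `sine_excitation` and these parameter choices are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence


def sine_excitation(
    f0_frames: Sequence[float],
    hop_length: int,
    sample_rate: int,
    amplitude: float = 0.1,
) -> List[float]:
    """Upsample a frame-level F0 contour into a sample-level sine signal.

    Hypothetical sketch: unvoiced frames (F0 == 0) produce zeros here, although
    some systems use low-level noise instead.
    """
    samples: List[float] = []
    phase = 0.0
    for f0 in f0_frames:
        for _ in range(hop_length):
            if f0 > 0:
                # Advance the phase by one sample's worth of the current F0.
                phase += 2.0 * math.pi * f0 / sample_rate
                phase %= 2.0 * math.pi  # keep the phase bounded
                samples.append(amplitude * math.sin(phase))
            else:
                samples.append(0.0)
    return samples
```

Accumulating the phase, instead of evaluating sin(2π·F0·t) independently per frame, keeps the waveform continuous when F0 changes between frames, so the excitation carries the melody without introducing clicks at frame boundaries.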