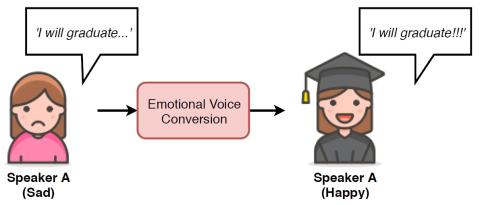

Emotional voice conversion aims to convert the spectrum and prosody of speech to change its emotional pattern while preserving the speaker identity and linguistic content. Many studies require parallel speech data between different emotional patterns, which is not practical in real life. Moreover, they often model the conversion of fundamental frequency (F0) with a simple linear transform. As F0 is a key aspect of intonation that is hierarchical in nature, we believe that it is more appropriate to model F0 at different temporal scales using the wavelet transform. We propose a CycleGAN network that finds an optimal pseudo pair from non-parallel training data by learning forward and inverse mappings simultaneously with adversarial and cycle-consistency losses. We also study the use of the continuous wavelet transform (CWT) to decompose F0 into ten temporal scales, which describe speech prosody at different time resolutions, for effective F0 conversion. Experimental results show that our proposed framework outperforms the baselines in both objective and subjective evaluations.
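As an illustration of the multi-scale F0 decomposition described above, the following is a minimal sketch rather than the implementation used in our experiments. It assumes a Mexican hat mother wavelet, scales spaced one octave apart, and an input contour that has already been interpolated over unvoiced frames and normalized; none of these choices is specified in the text above, and the names `mexican_hat`, `cwt_decompose`, and `base_scale` are hypothetical.

```python
import numpy as np

def mexican_hat(t):
    """Mexican hat (Ricker) mother wavelet evaluated at t (assumed choice)."""
    norm = 2.0 / (np.sqrt(3.0) * np.pi ** 0.25)
    return norm * (1.0 - t ** 2) * np.exp(-0.5 * t ** 2)

def cwt_decompose(contour, num_scales=10, base_scale=1.0):
    """Decompose a 1-D contour into num_scales wavelet components.

    Scales are base_scale * 2**(i + 1) frames for i = 0..num_scales-1,
    i.e. one octave apart (an assumption for this sketch).
    Returns an array of shape (num_scales, len(contour)).
    """
    contour = np.asarray(contour, dtype=float)
    frames = np.arange(len(contour))
    # offsets[b, x] = x - b: distance of every frame x from the centre b.
    offsets = frames[None, :] - frames[:, None]
    components = np.empty((num_scales, len(contour)))
    for i in range(num_scales):
        scale = base_scale * 2.0 ** (i + 1)
        # Scaled and shifted wavelet for every centre b, one row per b.
        kernel = mexican_hat(offsets / scale) / np.sqrt(scale)
        components[i] = kernel @ contour
    return components

# Toy contour: a slow phrase-level trend plus a faster syllable-level ripple.
n = np.arange(200)
toy_contour = np.sin(2 * np.pi * n / 200) + 0.3 * np.sin(2 * np.pi * n / 20)
scales = cwt_decompose(toy_contour)
print(scales.shape)
```

Small scales respond mainly to the fast ripple and large scales to the slow trend, which is the sense in which the components describe prosody at different time resolutions.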

Conclusion

In this paper, we propose a high-quality, parallel-data-free emotional voice conversion framework. We perform both spectrum and prosody conversion based on CycleGAN. We provide a non-linear method that uses CWT to decompose F0 into different temporal scales. Moreover, we study joint and separate training of CycleGAN for spectrum and prosody conversion. We observe that separate training of spectrum and prosody achieves better performance than joint training in terms of emotion similarity. Experimental results show that our proposed emotional voice conversion framework achieves better performance than the baseline without the need for parallel training data.
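To make the role of the cycle-consistency constraint concrete, the sketch below computes it for a pair of mappings between two emotion domains. It is an illustration under assumptions, not our training code: the L1 distance and the names `cycle_consistency_loss`, `g_xy`, and `g_yx` are hypothetical, and the generators are stand-in functions rather than neural networks.

```python
import numpy as np

def cycle_consistency_loss(x, y, g_xy, g_yx):
    """L1 distance between each input and its round-trip reconstruction.

    x, y  : feature arrays from the source and target emotion domains
            (they need not be parallel or time-aligned with each other).
    g_xy  : forward mapping, source -> target (stand-in callable).
    g_yx  : inverse mapping, target -> source (stand-in callable).
    """
    x_cycled = g_yx(g_xy(x))  # source -> target -> source
    y_cycled = g_xy(g_yx(y))  # target -> source -> target
    return np.mean(np.abs(x_cycled - x)) + np.mean(np.abs(y_cycled - y))

# Toy features: 5 frames x 3 dimensions per domain.
x = np.ones((5, 3))
y = np.ones((5, 3))

# Mappings that are exact inverses of each other give zero loss.
consistent = cycle_consistency_loss(x, y, lambda a: a + 1.0, lambda b: b - 1.0)

# Mappings that do not invert each other are penalized.
inconsistent = cycle_consistency_loss(x, y, lambda a: 2.0 * a, lambda b: b)
print(consistent, inconsistent)
```

Because the loss only compares each utterance with its own reconstruction, it never requires a paired utterance in the other emotion, which is what allows training on non-parallel data.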