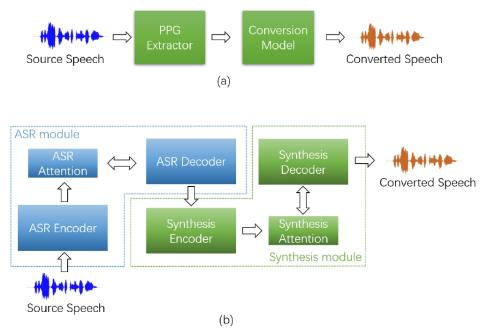

This paper proposes an any-to-many location-relative, sequence-to-sequence (seq2seq), non-parallel voice conversion approach, which utilizes text supervision during training. In this approach, we combine a bottle-neck feature extractor (BNE) with a seq2seq synthesis module. During the training stage, an encoder-decoder-based hybrid connectionist-temporal-classification-attention (CTC-attention) phoneme recognizer is trained, whose encoder has a bottle-neck layer. A BNE is obtained from the phoneme recognizer and is utilized to extract speaker-independent, dense and rich spoken content representations from spectral features. Then a multi-speaker location-relative attention based seq2seq synthesis model is trained to reconstruct spectral features from the bottle-neck features, conditioning on speaker representations for speaker identity control in the generated speech. To mitigate the difficulties of using seq2seq models to align long sequences, we down-sample the input spectral feature along the temporal dimension and equip the synthesis model with a discretized mixture of logistic (MoL) attention mechanism. Since the phoneme recognizer is trained with large speech recognition data corpus, the proposed approach can conduct any-to-many voice conversion. Objective and subjective evaluations show that the proposed any-to-many approach has superior voice conversion performance in terms of both naturalness and speaker similarity. Ablation studies are conducted to confirm the effectiveness of feature selection and model design strategies in the proposed approach. The proposed VC approach can readily be extended to support any-to-any VC (also known as one/few-shot VC), and achieve high performance according to objective and subjective evaluations.

Conclusion

In this paper, we re-design a prior approach to achieve a robust non-parallel seq2seq any-to-many VC approach. The novel approach concatenates a seq2seq phoneme recognizer (Seq2seqPR) and a multi-speaker duration informed attention network (DurIAN) for synthesis. Extension is also made on this approach to enable support of any-to-any voice conversion. Thorough examinations including objective and subjective evaluations are conducted for this model in any-to-many, as well as any-to-any settings.

To overcome the deficiencies of the PPG-based and nonparallel seq2seq any-to-many VC approaches, we further proposed a new any-to-many VC approach, which combines a bottle-neck feature extractor (BNE) with an MoL attention-based seq2seq synthesis model. This approach can easily be extended to any-to-any VC. Objective and subjective evaluation results show its superior VC performance in both any-to-many and any-to-any VC settings. Ablation studies have been conducted to confirm the effectiveness of feature selection and model design strategies in the proposed approach. The proposed BNE-Seq2seqMoL approach has successfully shortened the sequence-to-sequence VC pipeline to contain only an ASR encoder and a synthesis decoder. However, it still uses spectral features (i.e., mel spectrograms) as intermediate representations and relies on an independently trained neural vocoder to generate the waveform. This may reduce the synthesis quality, which be avoided by jointly training the whole VC pipeline in an end-to-end manner (i.e., waveform-to-waveform training). In the future, we will also explore the proposed approach in terms of source style transfer and emotion conversion.